Most of these phenomena are present in conventional industries, but they are particularly important for technology-intensive industries. I provide a survey and review of recent literature and examine some implications of these phenomena for corporate strategy and public policy.

During the 1990s there were three back-to-back events that stimulated investment in information technology: telecommunications deregulation in 1996, the ``year 2K'' problem in 1998-99, and the ``dot com'' boom in 1999-2000. The resulting investment boom led to a dramatic run-up of stock prices for information technology companies.

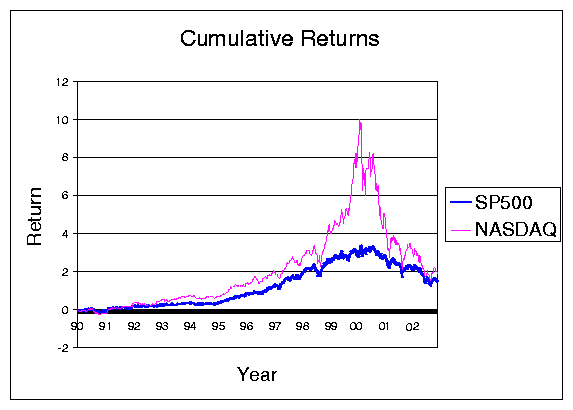

Many IT companies listed their stocks on NASDAQ. Figure 1 depicts the cumulative rate of return on the NASDAQ and the S&P500 during most of the 1990s. Note how closely the two indices track each other up until January of 1999, at which point NASDAQ took off on its roller coaster ride. Eventually it came crashing back, but it is interesting to observe that the total return on the two markets over the eight years depicted in the figure ended up being about the same.

Figure 1 actually understates the impact of technology firms on stock market performance, since a significant part of the S&P return was also driven by technology stocks. In December 1990, the technology component of the S&P was only 6.5 percent; by March 2000, it was over 34 percent. By July 2001, it was about 17 percent.

A prominent Silicon Valley venture capitalist described the dramatic run-up in technology stocks as the ``greatest legal creation of wealth in human history.'' As subsequent events showed, not all of it was legal and not all of it was wealth.

But the fact that only a few companies succeeded in capitalizing on the Internet boom does not mean that there was no social value in the investment that took place during 1999-2001. Indeed, quite the opposite is true. One can interpret Figure 1 as showing something quite different from the usual interpretation, namely, that competition worked very well during this period, so that much of the social gain from Internet technology ended up being passed along to consumers, leaving little surplus in the hands of investors.

Clearly the world changed dramatically in just a few short years. Email has become the communication tool of choice for many organizations. The World Wide Web, once just a scientific curiosum, has now become an indispensable tool for information workers. Instant messaging has changed the way our children communicate and is beginning to affect business communication.

Many macroeconomists attribute the increase in productivity growth in the late 1990s to the investment in IT during the first half of that decade. If this is true, then it is very good news, since it suggests we have yet to reap the benefits of the IT investment of the late 1990s.2

A major focus of this monograph is the relationship between technology and market structure. High-technology industries are subject to the same market forces as every other industry. However, there are some forces that are particularly important in high-tech, and it is these forces that will be our primary concern. These forces are not ``new.'' Indeed, the forces at work in network industries in the 1990s are very similar to those that confronted the telephone and wireless industries in the 1890s.

But forces that were relatively minor in the industrial economy turn out to be critical in the information economy. Second-order effects for industrial goods are often first-order effects for information goods.

Take, for example, cost structures. Constant fixed costs and zero marginal costs are common assumptions for textbook analysis, but are rarely observed for physical products since there are capacity constraints in nearly every production process. But for information goods, this sort of cost structure is very common; indeed, it is the baseline case. This is true not just for pure information goods, but even for physical goods like chips. A chip fabrication plant can cost several billion dollars to construct and outfit; but producing an incremental chip only costs a few dollars. It is rare to find cost structures this extreme outside of technology and information industries.
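To make the chip example concrete, here is a minimal sketch of how average cost behaves under a large fixed cost and a small constant marginal cost. The dollar figures are assumptions chosen only to match the orders of magnitude mentioned above ("several billion dollars" and "a few dollars"); they are not data from any actual plant.

```python
# Illustrative numbers only: the figures below are assumptions, picked to match
# the rough magnitudes in the text, not measurements from a real fab.
FIXED_COST = 3_000_000_000   # assumed cost of building the plant, in dollars
MARGINAL_COST = 5            # assumed cost of one additional chip, in dollars


def average_cost(quantity: int) -> float:
    """Average cost per chip when total cost = FIXED_COST + MARGINAL_COST * quantity."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return FIXED_COST / quantity + MARGINAL_COST


if __name__ == "__main__":
    # Average cost keeps falling toward marginal cost as output grows.
    for q in (1_000_000, 100_000_000, 1_000_000_000):
        print(f"{q:>13,} chips: average cost ${average_cost(q):,.2f}")
```

Under these assumed numbers, average cost is dominated by the fixed cost at low volumes and approaches the marginal cost only at very large volumes, which is the sense in which the cost structure is extreme.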

The effects I will discuss involve pricing, switching costs, scale economies, transactions costs, system coordination, and contracting. Each of these topics has been extensively studied in the economics literature. I do not pretend to offer a complete survey of the relevant literature, but will focus on relatively recent material in order to present a snapshot of the state of the art of research in these areas.

I try to refer to particularly significant contributions and other more comprehensive surveys. The intent is to provide an overview of the issues for an economically literate, but non-specialist, audience.

For a step up in technical complexity, I can recommend the survey of network industries in the Journal of Economic Literature consisting of articles by Katz and Shapiro [1994], Besen and Farrell [1994], Leibowitz and Margolis [1990], and the books by Shy [2001] and Vulkan [2003]. Farrell and Klemperer [2003] contains a detailed survey of work involving switching costs and network effects with an extensive bibliography.

For a step down in technical complexity, but with much more emphasis on business strategy, I can recommend Shapiro and Varian [1998a], which contains many real-world examples.

There is one major omission from this survey, and that is the role of intellectual property.

When speaking of information and technology used to manipulate information, intellectual property is a critical concern. Copyright law defines the property rights of the product being sold. Patent law defines the conditions that affect the incentives for, and constraints on, innovation in physical devices and, increasingly, in software and business processes.

My excuse for the omission of intellectual property from this survey is that this topic is ably covered by my coauthor, David [2002]. In addition to this piece, I can refer the reader to the surveys by Gallini and Scotchmer [2001], Gallini [2002] and Menell [2000], and the reviews by Shapiro [2000], Shapiro [2001]. Samuelson and Varian [2002] describe some recent developments in intellectual property policy.

First we must confront the question of what happened during the late 1990s. Viewed from 2003, such an exercise is undoubtedly premature, and must be regarded as somewhat speculative. No doubt a clearer view will emerge as we gain more perspective on the period. But at least I will offer one approach to understanding what went on.

I interpret the Internet boom of the late 1990s as an instance of what one might call ``combinatorial innovation.''

Every now and then a technology, or set of technologies, comes along that offers a rich set of components that can be combined and recombined to create new products. The arrival of these components then sets off a technology boom as innovators work through the possibilities.

This is, of course, an old idea in economic history. Schumpeter [1934], p. 66 refers to ``new combinations of productive means.'' More recently, Weitzman [1998] used the term ``recombinant growth.'' Gilfillan [1935], Usher [1954], Kauffman [1995] and many others describe variations on essentially the same idea.

The attempt to develop interchangeable parts during the early nineteenth century is a good example of a technology revolution driven by combinatorial innovation.3 The standardization of design (at least in principle) of gears, pulleys, chains, cams, and other mechanical devices led to the development of the so-called ``American system of manufacture'' which started in the weapons manufacturing plants of New England but eventually led to a thriving industry in domestic appliances.

A century later the development of the gasoline engine led to another wave of combinatorial innovation as it was incorporated into a variety of devices from motorcycles to automobiles to airplanes.

As Schumpeter points out in several of his writings (e.g., Schumpeter [2000]), combinatorial innovation is one of the important reasons why inventions appear in waves, or ``clusters,'' as he calls them.

... as soon as the various kinds of social resistance to something that is fundamentally new and untried have been overcome, it is much easier not only to do the same thing again but also to do similar things in different directions, so that a first success will always produce a cluster. (p 142)

Schumpeter emphasizes a ``demand-side'' explanation of clusters of innovation; one might also consider a complementary ``supply-side'' explanation: since innovators are, in many cases, working with the same components, it is not surprising to see simultaneous innovation, with several innovators coming up with essentially the same invention at almost the same time. There are many well-known examples, including the electric light, the airplane, the automobile, and the telephone.

A third explanation for waves of innovation involves the development of complements. When automobiles were first being sold, where did the paved roads and gasoline engines come from? The answer: the roads were initially the result of the prior decade's bicycle boom, and gasoline was often available at the general store to fuel stationary engines used on farms. These complementary products (and others, such as pneumatic tires) were enough to get the nascent technology going; and once the growth in the automobile industry took off it stimulated further demand for roads, gasoline, oil, and other complementary products. This is an example of an ``indirect network effect,'' which I will examine further in section 10.

The steam engine and the electrical engine also ignited rapid periods of combinatorial innovation. In the middle of the twentieth century, the integrated circuit had a huge impact on the electronics industry. Moore's law has driven the development of ever-more-powerful microelectronic devices, revolutionizing both the communications and the computer industry.

The routers that laid the groundwork for the Internet, the servers that dished up information, and the computers that individuals used to access this information were all enabled by the microprocessor.

But all of these technological revolutions took years, or even decades to work themselves out. As Hounshell [1984] documents, interchangeable parts took over a century to become truly reliable. Gasoline engines took decades to develop. The microelectronics industry took 30 years to reach its current position.

But the Internet revolution took only a few years. Why was it so rapid compared to the others? One hypothesis is that the Internet revolution was minor compared to the great technological developments of the past. (See, for example, Gordon [2000].) This may yet prove to be true; it's hard to tell at this point.

But another explanation is that the component parts of the Internet revolution were quite different from the mechanical or electrical devices that drove previous periods of combinatorial growth.

The components of the Internet revolution were not physical devices at all. Instead they were ``just bits.'' They were ideas, standards specifications, protocols, programming languages, and software.

For such immaterial components there were no delays to manufacture, or shipping costs, or inventory problems. Unlike gears and pulleys, you can never run out of HTML! A new piece of software could be sent around the world in seconds and innovators everywhere could combine and recombine this software with other components to create a host of new applications.

Web pages, chat rooms, clickable images, web mail, MP3 files, online auctions and exchanges ... the list goes on and on. The important point is that all of these applications were developed from a few basic tools and protocols. They are the result of the combinatorial innovation set off by the Internet, just as the sewing machine was a result of the combinatorial innovation set off by the push for interchangeable parts in the late eighteenth century munitions industry.

Given the lack of physical constraints, it is no wonder that the Internet boom proceeded so rapidly. Indeed, it continues today. As better and more powerful tools have been developed, the pace of innovation has even sped up in some areas, since a broader and broader segment of the population has been able to create online applications easily and quickly.

Twenty years ago the thought that a loosely coupled community of programmers, with no centralized direction or authority, would be able to develop an entire operating system would have been rejected out of hand. The idea would have been just too absurd. But it has happened: GNU/Linux was not only created online, but has even become respectable and raised a serious threat to very powerful incumbents.

Open source software is like the primordial soup for combinatorial innovation. All the components are floating around in the broth, bumping up against each other and creating new molecular forms, which themselves become components for future development.

Unlike closed-source software, open source allows programmers and ``wannabe programmers'' to look inside the black box to see how the applications are assembled. This is a tremendous spur to education and innovation.

It has always been so. Look at Josephson [1959]'s description of the methods of Thomas Edison:

``As he worked constantly over such machines, certain original insights came to him; by dint of many trials, materials long known to others, constructions long accepted were put together in a different way-and there you had an invention.'' (p. 91)

Open source makes the inner workings of software apparent, allowing future Edisons to build on, improve, and use existing programs, combining them to create something that may be quite new.

One force that undoubtedly led to the very rapid dissemination of the web was the fact that HTML was, by construction, open source. All the early web browsers had a button for ``view source,'' which meant that many innovations in design or functionality could immediately be adopted by imitators (and innovators) around the globe.

Perl, Python, Ruby, and other interpreted languages have the same characteristic. There is no ``binary code'' to hide the design of the original author. This allows subsequent users to add on to programs and systems, improving them and making them more powerful.

Each of the periods of combinatorial innovation referred to in the previous section was accompanied by financial speculation. New technologies that capture the public imagination inevitably lead to an investment boom: Sewing machines, the telegraph, the railroad, the automobile ... the list could be extended indefinitely.

Perhaps the period that bears the most resemblance to the Internet boom is the so-called ``Euphoria of 1923,'' when it was just becoming apparent that broadcast radio could be the next big thing.

The challenge with broadcast radio, as with the Internet, was how to make money from it. Wireless World, a hobbyist magazine, even sponsored a contest to determine the best business model for radio. The winner was ``a tax on vacuum tubes'' with radio commercials being one of the more unpopular choices.4

Broadcast radio, of course, set off its own stock market bubble. When the public gets excited about a new technology, a lot of ``dumb money'' comes into the stock market. Bubbles are a common outcome. It may be true that it's hard to start a bubble with rational investors, but it's not that hard with real people.

Though billions of dollars were lost during the Internet bubble, a substantial fraction of the investment made during this period still has social value. Much has been made of the miles of ``dark fiber'' that were laid. But it's just as cheap to lay 128 strands of fiber as a single strand, and the marginal cost of the ``excess'' investment was likely rather low.

The biggest capital investment during the bubble years was probably in human capital. The rush for financial success led to a whole generation of young adults immersing themselves in technology. Just as it was important for teenagers to know about radio during the 1920s and automobiles in the 1950s, it was important to know about computers during the 1990s. ``Being digital'' (whatever that meant) was clearly cool in the 1990s, just as ``being mechanical'' was cool in the 1940s and 1950s.

This knowledge of, and facility with, computers will have large payoffs in the future. It may well be that part of the surge in productivity observed in the late 1990s came from the human capital invested in facility with spreadsheets and web pages, rather than the physical capital represented by PCs and routers. Since the hardware, the software, and the wetware (the human capital) are inextricably linked, it is almost impossible to subject this hypothesis to an econometric test.

As we have seen, the confluence of Moore's Law, the Internet, digital awareness, and the financial markets led to a period of rapid innovation. The result was excess capacity in virtually every dimension: compute cycles, bandwidth, and even HTML programmers. All of these things are still valuable; they're just not the source of profit that investors once thought, or hoped, that they would be.

We are now in a period of consolidation. These assets have been, and will continue to be, marked to market to better reflect their true asset value, that is, their potential for future earnings. This process is painful, to be sure, but not that different in principle from what happened to the automobile market or the radio market in the 1930s. We still drive automobiles and listen to the radio, and it is likely that the web, or its successor, will continue to be used in the decades to come.

The challenge now is to understand how to use the capital investment of the 1990s to improve the way that goods and services are produced. Productivity growth has accelerated during the latter part of the 1990s, and, uncharacteristically, continued to grow during the subsequent slump. Is this due to the use of information technology? Undoubtedly it played a role, though there will continue to be debates about just how important it has been.

Now we are in the quiet phase of combinatorial innovation: the components have been perfected, the initial inventions have been made, but they have not yet been fully incorporated into organizational work practices.

David [1990] has described how the productivity benefits from the electric motor took decades to reach fruition. The real breakthrough came from miniaturization and the possibility of rearranging the production process. Henry Ford, and the entire managerial team, were down on the factory floor every day fine tuning the flow of parts through the assembly line as they perfected the process of mass production.

The challenge facing us now is to re-engineer the flow of information through the enterprise. And not only within the enterprise: the entire value chain is up for grabs. Michael Dell has shown us how direct, digital communication with the end user can be fed into production planning so as to perfect the process of ``mass customization.''

True, the PC is particularly susceptible to this form of organization, given that it is constructed from a relatively small set of standardized components. But Dell's example has already stimulated innovators in a variety of other industries. There are many other examples of innovative production enabled by information technology that will arise in the future.

There are those that claim that we need a new economics to understand the new economy of bits. I am skeptical. The old economics, or at least the old principles, work remarkably well. Many of the effects that drive the new information economy were there in the old industrial economy; you just have to know where to look.

Effects that were uncommon in the industrial economy, like network effects, switching costs, and the like, are the norm in the information economy. Recent literature that aims to understand the economics of information technology is firmly grounded in the traditional literature. As with technology itself, the innovation comes not in the basic building blocks, the components of economic analysis, but rather the ways in which they are combined.

Let us turn now to this task of describing these ``combinatorial innovations'' in economic thinking.

Price discrimination is important in high-tech industries for two reasons. First, the high-fixed-cost, low-marginal-cost technologies commonly observed in these industries often lead to significant market power, with the usual inefficiencies. In particular, price will often exceed marginal cost, meaning that the profit benefits to price discrimination will be very apparent to the participants.

In addition, information technology allows for fine-grained observation and analysis of consumer behavior. This allows for various kinds of marketing strategies that were previously extremely difficult to carry out, at least on a large scale. For example, a seller can offer prices and goods that are differentiated by individual behavior and/or characteristics.

This section will review some of the economic effects that arise from the ability to use more effective price discrimination.

In the most extreme case, information technology allows for a ``market of one,'' in the sense that highly personalized products can be sold at a highly personalized price. This phenomenon is also known as ``mass customization'' or ``personalization.''

Consumers can personalize their front page at many on-line newspapers and portals. They can buy a personally configured computer from Dell, and even purchase computer-customized blue jeans from Levi's. We will likely see more and more possibilities for customization of both information goods and physical products.

Amazon was accused of charging different prices to different customers depending on their behavior (Rosencrance [2000]), but they claimed that this was simply market experimentation. However, the ease with which one can conduct marketing experiments on the Internet is itself notable. Presumably companies will find it much more attractive to fine-tune pricing in Internet-based commerce, eliminating the so-called ``menu costs'' from the pricing decision. Brynjolfsson and Smith [1999] found that Internet retailers revise their prices much more often than conventional retailers, and that prices are adjusted in much finer increments.

The theory of monopoly first-degree price discrimination is fairly simple: firms will charge the highest price they can to each consumer, thereby capturing all the consumer surplus. However, it is clear that this is an extreme case. On-line sellers face competition from each other and from off-line sellers, so adding competition to this textbook model is important.
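The textbook monopoly case can be illustrated with a small numerical sketch. It assumes unit-demand consumers whose reservation prices are known to the seller and a constant marginal cost; the function names and the numbers in the usage note are hypothetical and chosen only for illustration.

```python
def uniform_price_outcome(values, marginal_cost):
    """Best single posted price for a monopolist facing unit-demand buyers.

    `values` are the buyers' reservation prices. Only reservation prices are
    tried as candidate prices, since profit can only peak at one of them.
    Returns (price, profit, consumer_surplus).
    """
    best = (None, 0.0, 0.0)
    for price in sorted(set(values)):
        buyers = [v for v in values if v >= price]
        profit = (price - marginal_cost) * len(buyers)
        if profit > best[1]:
            surplus = sum(v - price for v in buyers)
            best = (price, profit, surplus)
    return best


def personalized_price_outcome(values, marginal_cost):
    """First-degree discrimination: each buyer is charged their reservation price.

    Every buyer valued at or above marginal cost is served and keeps no surplus.
    Returns (profit, consumer_surplus).
    """
    served = [v for v in values if v >= marginal_cost]
    profit = sum(v - marginal_cost for v in served)
    return profit, 0.0
```

With assumed reservation prices `[10, 8, 6, 4, 2]` and a marginal cost of 1, the best uniform price is 6, which serves three buyers and leaves them some surplus, while personalized pricing serves all five buyers and transfers the entire surplus to the seller. The sketch deliberately ignores competition, which is exactly the limitation noted above.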

Ulph and Vulkan [2000], Ulph and Vulkan [2001] have examined the theory of first-degree price discrimination and product differentiation in a competitive environment. In their model, consumers differ with respect to their most desired products, and firms choose where to locate in product space and how much to charge each consumer. Ulph and Vulkan find that there are two significant effects: the ``enhanced surplus extraction effect'' and the ``intensified competition effect.'' The first effect refers to the fact that personalized pricing allows firms to charge prices closer to the reservation price for each consumer; the second effect refers to the fact that each consumer is a market to be contested. In one model they find that when consumer tastes are not dramatically different, the intensified competition effect dominates the surplus extraction effect, making firms worse off and consumers better off with competitive personalized pricing than with non-personalized pricing.

This is an interesting result, but their model assumes full information. Thus it leaves out the possibility that long-time suppliers of consumers know more about their customers than alternative suppliers. Sellers place much emphasis on ``owning the consumer.'' This means that they can understand their consumers' purchasing habits and needs better than potential competitors. Amazon's personalized recommendation service works well for me, since I have bought books there in the past. A new seller would not have this extensive experience with my purchase history, and would therefore offer me inferior service.

Of course, I could search on Amazon and purchase elsewhere, but there are other cases where free riding of this sort is not feasible. For example, a company called AmeriServe provides paper supplies to fast food stores. As a by-product, they found that their records about customer orders allowed them to provide better analysis and forecasts of their customers' needs than the customers themselves. Due to this superior information, AmeriServe was able to offer services to their customers such as recommended orders for restock. Such services were valuable to AmeriServe's customers, and therefore gave it an edge over competitive suppliers, allowing it to charge a premium for providing this service, either via a flat fee or via higher prices for their products.

Personalized pricing obviously raises privacy issues. A seller that knows its customers' tastes can sell them products that fit their needs better, but it will also be able to charge more for the superior service.

Obviously, I may want my tailor, my doctor, and my accountant to understand my needs and provide me with customized services. However, it is equally obvious that I do not, in general, want them to share this information with third parties, at least without my consent. The issue is not privacy, per se, but rather trust: consumers want to control how information about themselves is used.

In economic terms, bilateral contracts involving personal information can be used to enhance efficiency, at least when transactions costs are low. But sale of information to third parties, without consumer consent, would not involve explicit contracting, and there is no reason to think it would be efficient. What is needed, presumably, are default contracts to govern markets in personal information. The optimal structure of these default contracts will depend on the nature of the transactions costs associated with various arrangements. I discuss these issues in more detail in Varian [1997].

Another issue relating to personalized pricing and mass customization is advertising. Many of the services that use personalization also rely heavily on revenue from advertising. Search engines, for example, charge significantly more for ads keyed to ``hot words'' in search queries since these ads are being shown to consumers who may find them particularly relevant.

Google, for example, currently has over 100,000 advertisers who bid on keywords and phrases. When a user searches for information on one of these keywords, the appropriate ads are shown, where the bids are used to influence the position in which the ads appear.
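The text says only that bids "influence" ad position. As a rough sketch of the simplest rule consistent with that description, the following orders ads purely by bid. This is an assumption made for illustration, not a description of any search engine's actual ranking rule; the function name and advertiser names are hypothetical.

```python
def rank_ads(bids):
    """Order advertisers for one keyword by descending bid.

    `bids` maps a (hypothetical) advertiser name to its bid. Ranking purely by
    bid is an illustrative assumption; ties are broken alphabetically so that
    the ordering is deterministic.
    """
    return sorted(bids, key=lambda name: (-bids[name], name))
```

For example, `rank_ads({"a": 0.5, "b": 1.2, "c": 0.9})` places `b` in the top position, followed by `c` and then `a`.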

Second-degree price discrimination refers to a situation where everyone faces the same menu of prices for a set of related products. It is also known as ``product line pricing,'' ``market segmentation,'' or ``versioning.'' The idea is that sellers use their knowledge of the distribution of consumer tastes to design a product line that appeals to different market segments.

This form of price discrimination is, of course, widely used. Automobiles, consumer electronics, and many other products are commonly sold in product lines. We don't normally think of information goods as being sold in product lines but, upon reflection, it can be seen that this is a common practice. Books are available in hardback or paperback, in libraries, and for purchase. Movies are available in theaters, on airplanes, on tape, on DVD, and on TV. Newspapers are available on-line and in physical form. Traditional information goods are very commonly sold in different versions.

Information versioning has also been adopted on the Internet. To choose just one example, 20-minute delayed stock prices are available on Yahoo free of charge, but real-time stock quotes cost $9.95 a month. In this case, the providers are using ``delay'' to version their information.

Information technology is helpful in both collecting information about consumers, to help design product lines, and in actually producing the different versions of the product itself. See Shapiro and Varian [1998a], Shapiro and Varian [1998b], and Varian [2000] for an analysis of versioning.

The basic problem in designing a product line is ``competing against yourself.'' Often consumers with high willingness to pay will be attracted by lower-priced products that are targeted towards consumers with lower willingness to pay. This ``self-selection problem'' can be solved by lowering the price of the high-end products, by lowering the ``quality'' of the low-end products, or by some combination of the two.
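The self-selection problem can be seen in a small two-type example. The sketch below assumes one high-value and one low-value buyer, a premium and a basic version, zero production cost, and a seller who wants to serve both types; all valuations in the usage note are made-up numbers, and the function name is hypothetical.

```python
def best_menu_prices(high_vals, low_vals):
    """Prices for a (premium, basic) menu that keep each type on its own version.

    `high_vals` and `low_vals` are (value_of_premium, value_of_basic) pairs.
    The basic version is priced at the low type's valuation, and the premium
    price is the highest one at which the high type still prefers premium.
    Returns (premium_price, basic_price).
    """
    high_premium, high_basic = high_vals
    low_premium, low_basic = low_vals

    basic_price = low_basic  # low type is left with zero surplus
    # Surplus the high type could get by "trading down" to the basic version.
    high_surplus_from_basic = max(0, high_basic - basic_price)
    premium_price = high_premium - high_surplus_from_basic

    # Sanity check: the low type must not want to trade up to premium.
    if low_premium - premium_price > 0:
        raise ValueError("low type would prefer the premium version")
    return premium_price, basic_price
```

With assumed valuations of `(100, 60)` for the high type and `(50, 40)` for the low type, the premium price has to be cut to 80 to stop the high type from buying the basic version. If the basic version is degraded so that the valuations become `(100, 30)` and `(50, 35)`, the premium price can rise to 100 while the basic price falls to 35, which corresponds to the two remedies described above: lowering the high-end price or lowering the low-end quality.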

Making the quality adjustments may be worthwhile even when it is costly, raising the peculiar possibility that the low-end products are more costly to produce than the high-end products. See Deneckere and McAfee [1996] for a general treatment and Shapiro and Varian [1998a] for applications in the information goods context.

Varian [2000] analyzes some of the welfare consequences of versioning. Roughly speaking, versioning is good in that it allows markets to be served that would otherwise not be served. This is the standard output-enhancing effect of price discrimination described in Schmalensee [1981b] and Varian [1985]. However, the social cost of versioning is the quality reduction necessary to satisfy the self-selection constraint. In many cases the output effect appears to outweigh the quality reduction effect, suggesting that versioning is often welfare-enhancing.

Versioning is being widely adopted in the technology-intensive information goods industry. Intuit sells three different versions of their home accounting and tax software, Microsoft sells a number of versions of their operating systems and applications software, and even Hollywood has learned how to segment audiences for home video. The latest trend in DVDs is to sell a ``standard'' version for one price and an enhanced ``collector's edition'' for five to ten dollars more. The more elaborate version contains outtakes, director's commentary, storyboards and the like. This gives the studios a way to price discriminate between collectors and casual viewers, and between buyers and renters. Needless to say, the price difference between the two versions is much greater than the difference in marginal cost.

Third-degree price discrimination is selling at different prices to different groups. It is, of course, a classic form of price discrimination and is widely used.

The conventional treatment examines monopoly price discrimination, but there have been some recent attempts to extend this analysis to the competitive case. Armstrong and Vickers [2001] present a survey of this literature, along with a unified treatment and a number of new results. In particular they observe that when consumers have essentially the same tastes, and there is a fixed cost of servicing each consumer, then competitive third-degree price discrimination will generally make consumers better off. The reason is that competition forces firms to maximize consumer utility, and price discrimination gives them additional flexibility in dealing with the fixed cost. If there are no fixed costs, consumer utility falls with competitive third-degree price discrimination, even though overall welfare (consumer plus producer surplus) will still rise.

With heterogeneous consumers, the situation is not as clear. Generally consumer surplus is reduced and profits are enhanced by competitive price discrimination, so welfare may easily fall.

Another form of price discrimination that is of considerable interest in high-tech markets is price discrimination based on purchase history. Fudenberg and Tirole [1998] investigate models where a monopolist can discriminate between old and new customers by offering upgrades, enhancements, and the like. Fudenberg and Tirole [2000] investigate a duopoly model which adds an additional phenomenon of ``poaching:'' one firm can offer a low-ball price to steal another's customers. These results are extended by Villas-Boas [1999] and Villas-Boas [2001].

Acquisti and Varian [2001] examine a simple model with two types of consumers: high-value and low-value, in which a monopolist can commit to a price plan. They find that although a monopolistic seller is able to make offers conditional on previous purchase history, it is never profitable for it to do so, which is consistent with the earlier analysis of intertemporal price discrimination by Stokey [1979] and Salant [1989].

However, Acquisti and Varian [2001] also show that if the monopolist can offer an enhanced service such as one-click shopping or recommendations based on purchase history, it may be optimal to condition prices on earlier behavior and extract some of the value from this enhanced service.

One interesting effect of the Internet is that it can lower the cost of search quite dramatically. Even in markets where there are relatively few direct transactions over the Internet, such as automobiles, consumers appear to do quite a bit of information gathering before purchase.

There are many shopping agents that allow for easy price comparisons. According to Yahoo, mySimon, BizRate, PriceScan, and DealTime are among the most popular of these services. What happens when some of the consumers use shopping agents and others shop at random? This question has been addressed by Greenwald and Kephart [1999], Baye et al. [2001], Baye and Morgan [2001] and others. The structure of the problem is similar to that of Varian [1980], and it is not surprising that the solution is the same: sellers want to use a mixed strategy and randomize the prices they charge. This allows them to sometimes charge low prices so as to compete for the searchers and still charge, on average, a high price to the non-searchers. In my 1980 paper I interpreted this randomization as promotional sales; in the Internet context it is better seen as small day-to-day fluctuations in price. Baye et al. [2001] and Brynjolfsson and Smith [1999] show that on-line firms do engage in frequent small price adjustments, similar to those predicted by the theory. Janssen and Moraga-González [2001] examine how the equilibrium changes as the intensity of search changes in this sort of model.

One reason that more people don't use ``shopbots'' may be that they do not trust the results. Ellison and Ellison [2001] have found that it is common for online retailers to engage in ``bait and switch'' tactics: they will advertise an inferior version of a product (e.g., an obsolete memory chip) in order to attract users to their site. Such obfuscation may discourage users from relying on shopbots, leading to the kind of price discrimination described above.

Bundling refers to the practice of selling two or more distinct goods together for a single price (Adams and Yellen [1976]). This is particularly attractive for information goods since the marginal cost of adding an extra good to a bundle is negligible. There are two distinct economic effects involved: reduced dispersion of willingness to pay, which is a form of price discrimination, and increased barriers to entry, which is a separate issue.

To see how the price dispersion story works, consider a software producer who sells both a word processor and a spreadsheet. Mark is willing to pay $120 for the word processor and $100 for the spreadsheet. Noah is willing to pay $100 for the word processor and $120 for the spreadsheet.

If the vendor is restricted to a uniform price, it will set a price of $100 for each software product, realizing revenue of $400.

But suppose the vendor bundles the products into an ``office suite.'' If the willingness to pay for the bundle is the sum of the willingness to pay for the components, then each consumer will be willing to pay $220 for the bundle, yielding a revenue of $440 for the seller.
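The arithmetic of this example can be checked with a minimal sketch. The function names are hypothetical, and the sketch assumes, as the text does, that the vendor posts a single price per item and that the value of the bundle is the sum of the component values.

```python
# Willingness to pay (in dollars), taken from the example in the text.
wtp = {
    "Mark": {"word_processor": 120, "spreadsheet": 100},
    "Noah": {"word_processor": 100, "spreadsheet": 120},
}

def best_uniform_price_revenue(values):
    """Best revenue from one posted price, given each buyer's valuation."""
    # Only a price equal to some buyer's valuation can be optimal.
    return max(p * sum(1 for v in values if v >= p) for p in set(values))

def separate_revenue(wtp):
    """Revenue when each product is sold separately at its own uniform price."""
    products = next(iter(wtp.values())).keys()
    return sum(
        best_uniform_price_revenue([buyer[g] for buyer in wtp.values()])
        for g in products
    )

def bundle_revenue(wtp):
    """Revenue when the products are sold only as a bundle.

    Assumes the value of the bundle is the sum of the component values.
    """
    return best_uniform_price_revenue(
        [sum(buyer.values()) for buyer in wtp.values()]
    )

print(separate_revenue(wtp), bundle_revenue(wtp))
```

Sold separately, each product is priced at $100 and bought by both consumers; sold as a suite, the single price of $220 captures each consumer's full valuation.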

The enhanced revenue is due to the fact that bundling has reduced the dispersion of willingness to pay: essentially it has made the demand curve flatter. This example is constructed so that the willingnesses to pay are negatively correlated, so the reduction is especially pronounced. But the Law of Large Numbers tells us that unless a number of random variables are perfectly correlated, summing them up will tend to reduce relative dispersion, essentially making the demand curve flatter.
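The Law of Large Numbers argument can be illustrated with a small exact calculation. The sketch below assumes, purely for illustration, that each good is worth either 0 or 1 to a buyer with equal probability, independently across goods. Under that assumption the value of an n-good bundle is binomially distributed, and its relative dispersion (standard deviation divided by mean) falls like one over the square root of n.

```python
from math import comb, sqrt

def bundle_cv(n):
    """Coefficient of variation of the value of an n-good bundle.

    Illustrative assumption (not from the text): each good is worth 0 or 1
    with probability 1/2, independently across goods.
    """
    probs = [comb(n, k) / 2**n for k in range(n + 1)]
    mean = sum(k * p for k, p in enumerate(probs))
    var = sum((k - mean) ** 2 * p for k, p in enumerate(probs))
    return sqrt(var) / mean

for n in (1, 4, 100):
    print(n, round(bundle_cv(n), 3))
```

With independent valuations the reduction in relative dispersion is gradual; negative correlation, as in the office suite example, makes it much sharper.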

Bakos and Brynjolfsson [1999], Bakos and Brynjolfsson [2000], and Bakos and Brynjolfsson [2001] have explored this issue in considerable detail and show that bundling significantly enhances firm profit and overall efficiency, but at the cost of a reduction in consumer surplus. They also note that these effects are much stronger for information goods than for physical goods, due to the zero marginal cost of information goods.

Armstrong [1999] works in a somewhat more general model which allows for correlated tastes. He finds that an ``almost optimal'' pricing system can be implemented as a menu of two-part tariffs, with the variable part of the pricing proportional to marginal costs.

Whinston [1990], Nalebuff [1999], Nalebuff [2000], and Bakos and Brynjolfsson [2000] examine the entry deterrent effect of bundling. To continue with the office suite example, consider a more general situation where there are many consumers with different valuations for word processors and spreadsheets. By selling a bundled office suite, the monopoly software vendor reaches many of those who value both products highly and some of those who value only one of the products highly.

If a competitor contemplates entering either market, it will see that its most attractive customers are already taken. Thus it finds that the residual demand for its product is much reduced, making entry a much less attractive strategy.

In many cases the only way a potential entrant could effectively compete would be to offer a bundle with both products. This not only increases development costs dramatically, but it also makes competition very intense in the suite market, a not so sweet outcome for the entrant. When Sun decided to enter the office suite market with StarOffice, a competitor for Microsoft Office, they offered the package at a price of zero, recognizing that it would take such a dramatic price to make headway against Microsoft's imposing lead.

When you switch automobiles from Ford to G.M., the change is relatively painless. If you switch from Windows to Linux, it can be very costly. You may have to change document formats, applications software, and, most importantly, you will have to invest substantial time and effort in learning the new operating environment.

Changing software environments at the organizational level is also very costly. One study found that the total cost of installing an Enterprise Resource Planning (ERP) system such as SAP was eleven times greater than the purchase price of the software due to the cost of infrastructure upgrades, consultants, retraining programs, and the like.

These switching costs are endemic in high-technology industries and can be so large that switching suppliers is virtually unthinkable, a situation known as ``lock-in.''

Switching costs and lock-in have been extensively studied in the economics literature. See, for example, Klemperer [1987], Farrell and Shapiro [1989], Farrell and Shapiro [1988], Beggs and Klemperer [1992], and Klemperer [1995]. The last work is a particularly useful survey of earlier work. Shapiro and Varian [1998a] examine some of the business strategy implications of switching costs and lock-in.

Consider the following simple two-period model, adapted from Klemperer [1995]. There are n consumers, each of whom is willing to pay v per period to buy a non-durable good. There are two producers that produce the good at a constant identical marginal cost of c. The producers are unable to commit to future prices.

In order to switch consumption from one firm to the other, a consumer must pay a switching cost s. We suppose v ≥ c, but v-s < c, so that it pays each consumer to purchase the good but not to switch.

The unique Nash equilibrium in the second period is for each firm to set its price to the monopoly price v, making a profit of v-c per customer. The seller can extract full monopoly profit in the second period, since the consumers are ``locked-in,'' meaning that their switching costs are so high that the competing seller is unable to offer them a price sufficiently low to induce them to switch.

The determination of the first-period price will be discussed below, after we consider a few real-world examples.

When switching costs are substantial, competition can be intense to attract new customers, since, once they are locked in, they can be a substantial source of profit. Everyone has had the experience of buying a nice ink jet printer for $150 only to discover a few months later that the replacement cartridges cost $50. The notable fact is not that the cartridges are expensive, but rather that the printer is so cheap. And, of course, the printer is so cheap because the cartridges are so expensive. The printer manufacturers are following the time-tested strategy of giving away the razor to sell the blades.

Business Week reports that in 2000, HP's printer supply division made an estimated $500 million in operating profit on sales of $2.4 billion. The rest of HP's businesses lost $100 million on revenues of $9.2 billion. The inkjet cartridges reportedly have over 50% profit margins. (Roman [2001])

In a related story, Cowell [2001] reports that SAP's profits rose by 78 percent in the second quarter of 2001, even in the midst of a widespread technology slump. As he explains, ``... because SAP has some 14,000 existing customers using its products, it is able to sell them updated Internet software...''

Ausubel [1991] and Kim et al. [2003] examine switching costs in the credit card and bank loan markets and find that they are substantial: in the bank loan case, they appear to amount to about a third of the average interest rate on loans.

Chen and Hitt [2001] use a random utility model to study switching costs for online brokerage firms. They find that breadth of product offering is the single best explanatory variable in their model, and that demographic variables are not very useful predictors. This is important since breadth of product offerings is under control of the firm; if a variety of products can be offered at a reasonable cost, then it should help in reducing the likelihood of customer switching.

As these examples illustrate, lock-in can be very profitable for firms. It is not obvious that switching costs necessarily reduce consumer welfare, since the competition to acquire the customers can be quite beneficial to consumers. For example, consumers who use their printers much less than average are clearly made better off by having a low price for printers, even though they have to pay a high price for cartridges.

The situation may be somewhat different for companies like SAP, Microsoft, or Oracle. They suffer from the ``burden of the locked-in customers,'' in the sense that they would like to sell at a high price to their current customers (on account of their switching costs) but would also like to compete aggressively for new customers, since they will remain customers for a long time and contribute to future profit flows. This naturally leads such firms to want to price discriminate in favor of new customers, and such strategies are commonly used.

Though he acknowledges that in many cases welfare may go either way, Klemperer [1995] concludes that switching costs are generally bad for consumer welfare: they typically raise prices over the lifetime of the product, create deadweight loss, and reduce entry.

Return to the model of section 6.1. Suppose for simplicity that the discount rate is zero, so that the sellers only care about the sum of the profit over the two periods. In this case, each firm would be willing to pay up to v-c to acquire a customer.

Bertrand competition pushes the present value of the profit of each firm to zero, yielding a first period price of 2c-v. The higher the second-period monopoly payoff, the smaller the first period price will be, reflecting the result of the competition to acquire the monopoly.
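The pricing logic of the two-period model can be sketched in a few lines. The function name and the numerical values are illustrative assumptions. The sketch also assumes the switching cost is large enough that a rival pricing at cost cannot lure a locked-in customer away (s > v-c), and that the discount rate is zero.

```python
def lock_in_prices(v, c, s):
    """Equilibrium prices in the two-period model without price commitment.

    v: per-period willingness to pay, c: marginal cost, s: switching cost.
    Assumes v >= c and s > v - c, so customers buy but never switch.
    """
    if not (v >= c and v - s < c):
        raise ValueError("model assumes v >= c and s > v - c")
    p2 = v          # period 2: locked-in customers pay the monopoly price
    rent = p2 - c   # period-2 profit per locked-in customer
    p1 = c - rent   # zero total profit: (p1 - c) + (p2 - c) = 0, so p1 = 2c - v
    return p1, p2

p1, p2 = lock_in_prices(v=10, c=6, s=5)
print(p1, p2, p1 + p2)
```

In this example the first-period price of 2 is well below the marginal cost of 6, and the total paid over the two periods equals 2c, so the second-period rent is fully competed away up front.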

If we assumed the goods were partial substitutes, rather than perfect substitutes, we would get a less extreme result, but it is still typically the case that the first-period price is lower because of the second-period lock-in. See Klemperer [1989] and Klemperer [1995] for a detailed analysis of this point.

It is worth noting that the conclusion that first-period prices are lower due to switching costs depends heavily on the assumption that the sellers cannot commit to second-period prices. If the sellers can commit to second period prices, the model collapses to a one-period model, where the usual Bertrand result holds. In the specific model discussed here, the price for two periods of consumption would be competed down to 2c.

One common example of switching costs involves specialized supplies, as with inkjet printer cartridges. In this example, the switching cost is the purchase of a new printer. The market is competitive ex ante, but since cartridges are incompatible, it is monopolized ex post.

This situation can also be viewed as a form of price discrimination. The consumer cares about the price of the printer plus the price of however many cartridges the consumer buys. If all consumers are identical, a monopolist that can commit to future prices would set the price of the cartridges equal to their marginal cost and use its monopoly power on the printer. This is just the two-part tariff result of Oi [1971] and Schmalensee [1981a].

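Suppose now that there are two types of customers, those with high demand and low demand. Let $p$ be the price of cartridges, $c$ their marginal cost, $x_H(p)$ be the demand function of the high-demand type and $x_L(p)$ the demand of the low-demand type. Let $v_L(p)$ be the indirect utility of the low-demand type. Then the profit maximization problem for the monopolist is

$$\max_p \; v_L(p) + (p-c)\,x_L(p) + v_L(p) + (p-c)\,x_H(p).$$

Since $v_L'(p) = -x_L(p)$, the first-order condition reduces to

$$(p-c)\,[x_L'(p) + x_H'(p)] + x_H(p) - x_L(p) = 0,$$

so the price of cartridges exceeds marginal cost whenever $x_H(p) > x_L(p)$.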

What is happening here is that the users distinguish their type by the amount of their usage, so the seller can price discriminate by building in a positive price-cost margin on the usage rather than the initial purchase price.
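A numerical sketch may help fix ideas. It rests on several illustrative assumptions that are not in the text: there is one consumer of each type, demands are linear with x_i(p) = a_i - p, the printer is priced at the low type's surplus v_L(p) = (a_L - p)^2 / 2 so that both types buy, and the parameter values are arbitrary. Under these assumptions the profit-maximizing cartridge price works out to c + (a_H - a_L)/2, which is above marginal cost.

```python
def profit(p, c, a_low, a_high):
    """Monopoly profit with one low-demand and one high-demand buyer.

    Illustrative assumptions: linear demands x_i(p) = a_i - p and a printer
    price equal to the low type's surplus, so that both types buy.
    """
    x_low, x_high = a_low - p, a_high - p
    v_low = x_low ** 2 / 2  # low type's surplus at cartridge price p
    return 2 * v_low + (p - c) * (x_low + x_high)

def best_cartridge_price(c, a_low, a_high, steps=1000):
    """Grid search between marginal cost and the low type's choke price."""
    grid = [c + (a_low - c) * i / steps for i in range(steps + 1)]
    return max(grid, key=lambda p: profit(p, c, a_low, a_high))

c, a_low, a_high = 2, 10, 12
p_star = best_cartridge_price(c, a_low, a_high)
print(p_star, profit(p_star, c, a_low, a_high), profit(c, c, a_low, a_high))
```

Pricing cartridges at marginal cost yields a profit of 64 in this example, while the positive margin raises it to 65: the high-demand type pays more in total because it buys more cartridges.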

We have already noted that many information and technology-related businesses have cost structures with large fixed costs and small, or even zero, marginal costs. They are, to use the textbook term, ``natural monopolies.'' The solution to natural monopolies offered in many textbooks is government regulation. But regulation offers its own inefficiencies, and there are several reasons why the social loss from high fixed cost, low marginal cost industries may be substantially less than is commonly believed.

First, competition in the real world is much more dynamic than in the textbook examples. The textbook analysis starts with the existence of a monopoly, but rarely does it examine how that monopoly came about.

If the biggest firm has the most significant cost advantages, firms will compete intensively to be biggest, and consumers will benefit from that competition, as described in section 6.2. Amazon believed, rightly or wrongly, that scale economies were very important in on-line retailing, and consumers benefited from the low prices it charged while it was trying to build market share.

Third, information technology has reduced fixed costs and thus the minimum efficient scale of operation in many markets. Typography and page layout used to be tasks that only experts could carry out; now anyone with a $1000 computer can accomplish reasonably professional layout. Desktop publishing has led to an explosion of new entrants in the magazine business. (Of course it is also true that many of these entrants have been subsequently acquired due to other economies of scope and scale in the industry; see Kuczynski [2001].)

The same thing will happen to other content industries, such as movie making, where digital video offers very substantial cost reductions and demand for variety is high.

Even chip making may be vulnerable: researchers are now using off-the-shelf inkjet printers to print integrated circuits on metallic film, a process that could dramatically change the economics of this industry.

When costs are falling rapidly, and the market is growing rapidly, it is often possible to overcome cost advantages via leapfrogging. Even though the largest firm may have a cost advantage at any point in time, if the market is growing at 40 percent per year, the tables can be turned very rapidly. Wordstar and Wordperfect once dominated the word processor market; Visicalc and Lotus once dominated the spreadsheet market. Market share alone is no guarantee of success.

Christensen [1997] has emphasized the role of ``disruptive technologies:'' low cost, and, initially low quality, innovations that unseat established industry players. Examples are RAID arrays of disk drives, low cost copiers, ink-jet printers, and similar developments. Just as nature abhors a vacuum, inventors and entrepreneurs abhor a monopoly, and invest heavily in trying to invent around the blocking technology. Such investment may be deadweight loss, but it may sometimes lead to serendipitous discoveries.

For example, much money was spent trying to invent around the xerography patents. One outcome was ink jet printers. These never really competed very well with black and white xerography, but have become dramatically more cost-effective technologies than xerography for color printing.

Fourth, it should also be remembered that many declining average cost industries involve durables of one form or another. PCs and operating systems are technologically obsolete far before they are functionally obsolete. In these industries the installed base creates formidable competition for suppliers since the sellers continually have to convince their users to upgrade. The ``durable goods monopoly'' literature inspired by Coase [1972] is not just a theoretical curiosum, but is rather a topic of intense concern in San Jose and Redmond.

Finally, we should mention the pressures on price from producers of complementary products. Since the cost of an information system to the end user depends on the sum of the prices of the components, each component maker would like to see low prices for the other components. Hardware makers want cheap software and vice versa. I explore this in more depth in section 10.

In summary, although supply side economies of scale may lead to more concentrated industries, this may not be so bad for consumers as is often thought. Price discipline still asserts itself through at least four different routes.

Despite these four effects, there is still a presumption that in a mature industry that exhibits large fixed costs, equilibrium price will typically exceed marginal cost, leading to the conventional inefficiencies. See Delong and Froomkin [2001] for an extended discussion of this issue.

However, it should be remembered that, even in a static model, the correct formulation for the efficiency condition is that marginal price should equal marginal cost. If the information good (or chip, or whatever) is sold to different consumers at different prices, profit seeking behavior may well result in an outcome where users with low willingnesses to pay may end up facing very low prices, implying that efficiency losses are not substantial.

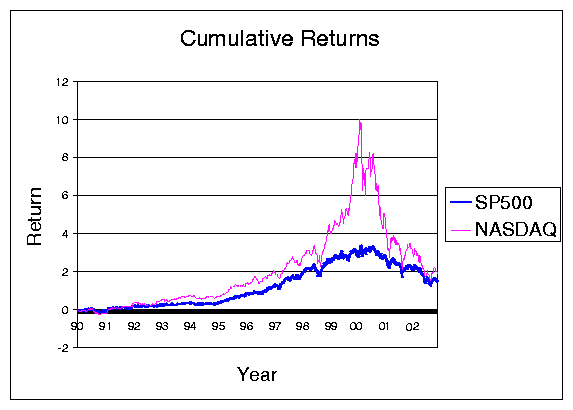

The traditional view of monopoly is that it creates deadweight loss and producer surplus, labeled DW and PS in Figure 2A. However, perfect price discrimination eliminates the deadweight loss and competition for the monopoly transfers the resulting monopoly rents to the consumers, as shown in Figure 2B.
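The areas in Figure 2 can be made concrete with a minimal sketch. It assumes, for illustration only, a linear inverse demand p = a - q and a constant marginal cost c, and it ignores fixed costs. The function name and parameter values are hypothetical.

```python
def linear_monopoly_surplus(a, c):
    """Surplus under uniform monopoly pricing vs. perfect discrimination.

    Illustrative assumptions: inverse demand p = a - q, constant marginal
    cost c < a, fixed costs ignored.
    """
    total = (a - c) ** 2 / 2    # maximal gains from trade
    q_m = (a - c) / 2           # output of a uniform-price monopolist
    p_m = a - q_m               # uniform monopoly price
    ps = (p_m - c) * q_m        # producer surplus (PS)
    cs = (a - p_m) * q_m / 2    # consumer surplus
    dw = total - ps - cs        # deadweight loss (DW)
    return {
        "uniform": {"PS": ps, "CS": cs, "DW": dw},
        # Perfect discrimination: each unit sells at its buyer's valuation.
        "perfect_discrimination": {"PS": total, "CS": 0.0, "DW": 0.0},
    }

print(linear_monopoly_surplus(a=10, c=2))
```

With a = 10 and c = 2, uniform pricing leaves a deadweight loss of 8 out of 32 in potential surplus, while perfect discrimination realizes all 32. Who ends up with that surplus then depends on how intensely firms compete for the monopoly.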

The first and second theorems of welfare economics assert that 1) a competitive equilibrium is Pareto efficient, and 2) under certain convexity assumptions every Pareto efficient outcome can be supported as a competitive equilibrium. Under conditions of high fixed cost and low marginal cost, it is well known that a competitive equilibrium may not exist, so the first theorem is irrelevant, and the required convexity conditions may not hold, making the second welfare theorem also irrelevant.

But Figure 2B suggests what we might call the third and fourth theorems of welfare economics: 3) a perfectly discriminating monopolist can capture all surplus for itself and therefore produce Pareto efficient output, and 4) competition among perfectly discriminating monopolists will transfer this surplus to consumers, yielding the same outcome as pure competition.

These are, of course, standard observations in any intermediate microeconomics text. However, surprisingly little attention has been paid to them in the more advanced literature.

These ``theorems'' have not been precisely stated, although it is clearly possible to write down simple models where they hold. In reality price discrimination is never perfect and competition for monopoly is never costless. But then again, the assumptions for the first and second welfare theorems are not exactly satisfied in reality either.

As with the first and second welfare theorems, the third and fourth welfare theorems should be viewed as parables: under certain conditions market forces may have desirable outcomes. In particular, one should not assume that large returns to scale will necessarily result in reduced consumer welfare, particularly in environments where price discrimination is possible and competition is intense.

Even in the ideal world depicted in Figure 2, two important qualifications must be kept in mind. The first has to do with the choice of the dimensions in which to compete, the second has to do with the rules of competition.

The fourth welfare theorem assumes that the competition for the monopoly rent necessarily benefits consumers. If the strategic variables for the firms are prices, this is probably true. Other strategic choices such as innovation, quality choice, and so on also tend to benefit consumers. However, firms may also compete on other dimensions that have less benign consequences, such as political lobbying, the accumulation of excess capacity, premature entry and so on.

There is a large literature on each of these topics. One defect of this literature is that the typical approach assumes a single dimension of competition between firms: bribes to bureaucrats, prices to consumers, quality choice, entry timing, and so on. In reality, there may be many dimensions to competition, some of which are transfer payments to consumers (such as prices), some of which are transfers to third parties (such as bribes), and some of which involve pure rent dissipation (such as investment in capacity that is never used).

I believe that the choice of dimensions in which to compete has not received sufficient attention in the literature and that this is a fruitful area for future research. It also has considerable relevance for competition policy. From the viewpoint of competing for a monopoly, promotional pricing or adopting inferior technology are both costs to the firms, but they may have very important differences for consumer welfare calculations. Designing an environment in which competition results in transfers to consumers, rather than wasteful rent dissipation, is clearly an attractive policy goal.

For example, suppose that there is a resource that confers some sort of monopoly power. It may make more sense for the government to auction off this resource than to allow firms to compete for it using more wasteful currencies such as political lobbying. This, of course, has been part of the rationale for various privatization efforts in recent decades, but the lesson is more general. Another important example is compulsory licensing of intellectual property, which may be attractive if there are high transactions costs to bargaining.

Competition is generally good, but some regulation may be required to make sure that competition takes socially beneficial forms. The goal of a footrace is to see who can run the fastest, not who is the most adept at tripping their opponents or rigging the clock.

Even if the currency of competition does not involve excessive waste, the form that competition takes (the rules of the game) can be critical in determining how much of the prize (the value of the monopoly) gets passed along to consumers.

A useful way to model this is to think of the monopoly as a prize to be auctioned off. Different auction forms describe different forms of competition.

Consider, for example, two makers of Enterprise Resource Planning (ERP) systems who are bidding to install their systems in Fortune 500 companies. This might reasonably be modeled as an English auction, in which the highest bidder gets the monopoly, but pays the second highest bid. If the two bidders have different costs, but are selling an identical product, the winning bidder still retains some surplus.

Alternatively, we could imagine an everyone pays auction, such as a patent race or a race to build scale. In these cases, each party has to pay, and we might assume that the party that pays the most wins the monopoly.

Let v1 be the value of the prize (the monopoly) to player 1 and v2 the value to player 2, which we assume to be common knowledge. When the players are symmetric, so v1=v2, the sum of the payments by the players equals the expected value of the prize.

When players are not symmetric, the equilibrium has a more interesting structure. The player with the highest value always bids for the monopoly; but the player with the second-highest value will bid only with probability v2/v1. If v2 is small relative to v1, then the equilibrium expected payment approaches v2/2, which is half the payment in the English auction. (See Hillman and Riley [1989] for a thorough analysis of this game and Riley [1998] for a summary.)
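The comparison between the two auction forms can be sketched numerically. The code below assumes the standard mixed-strategy equilibrium of the complete-information everyone pays auction: player 1 bids uniformly on [0, v2], while player 2 bids with probability v2/v1 and, when bidding, also bids uniformly on [0, v2]. The function names and values are illustrative.

```python
def all_pay_expected_payments(v1, v2):
    """Expected payments in the everyone pays auction, with v1 >= v2 > 0.

    Assumes the standard mixed-strategy equilibrium: player 1 bids uniformly
    on [0, v2]; player 2 bids with probability v2/v1 and, when bidding,
    bids uniformly on [0, v2].
    """
    if not (v1 >= v2 > 0):
        raise ValueError("expects v1 >= v2 > 0")
    pay1 = v2 / 2
    pay2 = (v2 / v1) * (v2 / 2)
    return pay1, pay2

def english_auction_revenue(v1, v2):
    """The high-value bidder wins and pays the second-highest value."""
    return min(v1, v2)

for v1, v2 in [(10, 10), (100, 1)]:
    total = sum(all_pay_expected_payments(v1, v2))
    print(v1, v2, total, english_auction_revenue(v1, v2))
```

With symmetric values the total expected payment equals the value of the prize, as noted above. With v1 = 100 and v2 = 1 it is about 0.505, roughly half of the English auction payment of 1.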

The difference arises because in the equilibrium strategy the player with a very low value for the monopoly often doesn't bid at all. This induces the player with the high value to shave its bid, resulting in lower auction revenues which, in our context, translates to consumers ending up with less surplus.

Yet a third example is a war of attrition in which both players compete until one drops out. Riley [1998] analyzes this game in some detail and shows that there is a continuum of equilibrium strategies. He presents an equilibrium selection argument that chooses an equilibrium where the player with the lower value drops out immediately. In this case, the player with the highest value for the monopoly wins the monopoly without having to compete at all!

Think, for example, of two firms that contemplate pricing below cost in order to build market share. One firm is known to value the monopoly much more than the other, perhaps due to significantly lower production costs. In this case, it is not implausible that the firm with a lower value would give up at the outset, realizing that it would not be able to compete effectively against the other.

This could be a great deal for the winning firm, and a bad deal for the consumers, since they do not benefit from the competition for the eventual monopoly.

The lesson from the ``everyone pays auction'' and the ``war of attrition'' is that if all parties have to pay to compete, you may end up with less competition and therefore fewer benefits passed along to consumers. An auction where only the winner pays is much better from the social point of view.

Clearly many different models of competition are possible, with different models having different implications for how the surplus is divided between consumers and firms competing for the monopoly. I've sketched out some of the possibilities, but there are many other variations (e.g., contestable markets) and I expect that this is a promising area for future research.

One final point is worth making. I have already observed that under certain conditions, the competition to acquire a price discriminating monopoly will dissipate all rents. If the dissipation involves offering heavy discounts to consumers, for example, then the gain in surplus that consumers receive in the competition phase may offset, at least to some degree, the losses incurred in the monopoly phase.

But there is clearly a time consistency problem here. Even in the ideal circumstances of the fourth welfare theorem, gains that accrue to early generations may not affect the acquiescence of later generations to monopoly power. The fact that my father got a great deal on Lotus 123 or Wordperfect may be of little solace to me when I have to pay a high price for their successors.

Demand-side economies of scale are also known as ``network externalities'' or ``network effects,'' since they commonly occur in network industries. Formally, a good exhibits network effects if the demand for the good depends on how many other people purchase it. The classic example is a fax machine; picture phones and email exhibit the same characteristic.

The literature distinguishes between ``direct network effects,'' of the sort just described, and ``indirect network effects,'' which are sometimes known as ``chicken and egg problems.'' I don't directly care whether or not you have a DVD player; that doesn't affect the value of my DVD player. However, the more people that have DVD players, the more DVD-readable content will be provided, which I do care about. So, indirectly, your DVD player purchase tends to enhance the value of my player.

Indirect network effects are endemic in high-tech products. Current challenges include residential broadband and applications, and 3G wireless and applications. In each case, the demand for the infrastructure depends on the availability of applications, and vice versa. The cure for the current slump, according to industry pundits, is a new killer app. Movies on demand, interactive TV, mobile commerce: there are plenty of candidates, but investors are wary, and for good reason: there are very substantial risks involved.

I will discuss the indirect network effects in section 10. In this section, I focus on the direct case.

I like to use the terminology ``demand side economies of scale'' since it forms a nice parallel with the classic supply side economies of scale discussed in the previous section. With supply side economies, average cost decreases with scale, while with demand side economies of scale, average revenue (demand) increases with scale. Much of the discussion in the previous section about competition to acquire a monopoly also applies in the case of demand side economies of scale.

When network effects are present, there are normally multiple equilibria. If no one adopts a network good, then it has no value, so no one wants it. If there are enough adopters, then the good becomes valuable, so more adopt it-making it even more valuable. Hence network effects give rise to positive feedback.

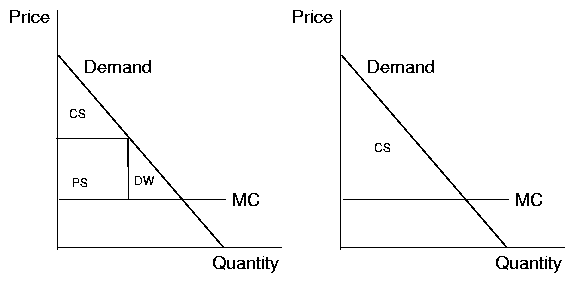

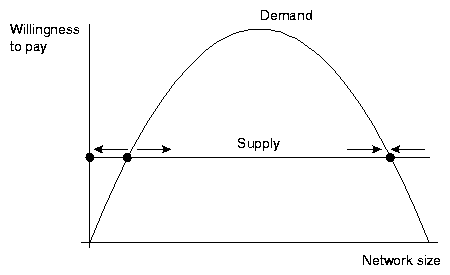

We can depict this process in a simple supply-demand diagram. The demand curve (or, more precisely, the ``fulfilled expectations demand curve'') for a network good typically exhibits the hump shape depicted in Figure 3. As the number of adopters increases the marginal willingness to pay for the good also increases due to the network externality; eventually, the demand curve starts to decline due to the usual effects of selling to consumers with progressively lower willingness to pay.

In the case depicted, with a perfectly elastic supply curve, there are three equilibria. Under the natural dynamics, which has quantity sold increasing when demand is greater than supply and decreasing when demand is less than supply, the two extreme equilibria are stable and the middle equilibrium is unstable.

Hence the middle equilibrium represents the ``critical mass.'' If the market can get above this critical mass, the positive feedback kicks in and the product zooms off to success. But if the product never reaches a critical mass of adoption, it is doomed to fall back to the stable zero-demand/zero-supply equilibrium.
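The three equilibria and the role of the critical mass can be illustrated with a minimal numerical sketch. It assumes a specific hump-shaped willingness-to-pay curve, p(n) = n(1 - n), where n is the fraction of potential users who adopt. This functional form, the price of 0.16, the adjustment speed, and the function names are illustrative assumptions and are not taken from Figure 3.

```python
import math

def willingness_to_pay(n):
    """Assumed fulfilled-expectations demand curve: hump-shaped in adoption share n."""
    return n * (1.0 - n)

def equilibria(price):
    """Adoption levels where willingness to pay equals the flat supply price.

    n = 0 is always an equilibrium (no one adopts, so the good has no value).
    The interior equilibria solve n * (1 - n) = price.
    """
    disc = 1.0 - 4.0 * price
    if disc < 0:
        return [0.0]  # price above the peak of the hump: only the zero equilibrium
    root = math.sqrt(disc)
    return [0.0, (1.0 - root) / 2.0, (1.0 + root) / 2.0]

def simulate(n0, price, speed=0.5, steps=2000):
    """Ad hoc dynamics: adoption rises when willingness to pay exceeds price, else falls."""
    n = n0
    for _ in range(steps):
        n += speed * (willingness_to_pay(n) - price)
        n = min(max(n, 0.0), 1.0)  # keep the adoption share in [0, 1]
    return n

price = 0.16
zero, critical_mass, high = equilibria(price)
below = simulate(critical_mass - 0.05, price)  # start just below the critical mass
above = simulate(critical_mass + 0.05, price)  # start just above the critical mass

print(f"critical mass = {critical_mass:.2f}, high equilibrium = {high:.2f}")
print(f"start below -> {below:.2f}, start above -> {above:.2f}")
```

Under these assumptions, a market that starts slightly below the middle equilibrium collapses to zero adoption, while one that starts slightly above it converges to the high-adoption equilibrium.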

Consider an industry where the price of the product (a fax machine, say) is very high, but is gradually reduced over time. As Figure 3 shows, the critical mass will then become smaller and smaller. Eventually, due to random fluctuation or due to a deliberate strategy, the sales of the product will exceed the critical mass.
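The shrinking critical mass can be sketched numerically. The example below again assumes the illustrative demand curve p(n) = n(1 - n), so that the critical mass is the smaller root of n(1 - n) = price; the price path and the function name are hypothetical.

```python
import math

def critical_mass(price):
    """Smaller root of n * (1 - n) = price, or None if price exceeds the peak (0.25)."""
    disc = 1.0 - 4.0 * price
    if disc < 0:
        return None  # price too high: no positive-adoption equilibrium exists
    return (1.0 - math.sqrt(disc)) / 2.0

# Hypothetical falling price path
prices = [0.24, 0.20, 0.16, 0.10, 0.05, 0.01]
masses = [critical_mass(p) for p in prices]

for p, m in zip(prices, masses):
    print(f"price = {p:.2f} -> critical mass = {m:.3f}")
```

As the price falls along this path, the adoption share needed to trigger positive feedback falls with it, so a small fluctuation or a modest promotional push becomes sufficient to tip the market.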

Though this story is evocative, I must admit that the dynamics are rather ad hoc. It would be nice to have a more systematic derivation of dynamics in network industries. Unfortunately, microeconomic theory is notoriously weak when it comes to dynamics, and there is not very much empirical work to determine which dynamic specifications make sense. The problem is that for most network goods, the frequency of data collection is too low to capture the interesting dynamics.

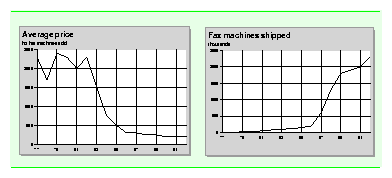

Figure 4 depicts the prices and shipments of fax machines in the U.S. during the 1980s. Note the dramatic drop in price and the contemporaneous dramatic increase in demand in the mid-eighties. This is certainly consistent with the story told above, but it is hardly conclusive. Economides and Himmelberg [1995] make an attempt to estimate a model based on these data, but, as they acknowledge, this is quite difficult to do with low-frequency time-series data.

There have been some attempts to empirically examine network models using cross sectional data. Goolsbee and Klenow [2000] examine the diffusion of home computers and find a significant effect for the influence of friends and neighbors in computer purchase decisions, even when controlling for other income, price, and demographic effects.

All these examples refer to network externalities for a competitive industry selling a compatible product: a fax machine, email, or similar product. Rohlfs [1974] was the first to analyze this case in the economics literature; he was motivated by AT&T's disastrous introduction of the PicturePhone.

Katz and Shapiro [1985], Katz and Shapiro [1986a], Katz and Shapiro [1986b], and Katz and Shapiro [1992] have examined the impact of network externalities in oligopoly models in which technology adoption is a key strategic variable. Economides [1996] and Katz and Shapiro [1994] provide useful reviews of the literature, while Rohlfs [2001] provides a history of industries in which network effects played a significant role.

Network effects are clearly prominent in some high-technology industries. Think, for example, of office productivity software such as word processors. If you are contemplating learning a word processor, it is natural to lean towards the one with the largest market share, since that will make it easier to exchange files with other users, easier to work on multi-authored documents, and easier to find help if you encounter a problem. If you are choosing an operating system, it is natural to choose the one that has the most applications of interest to you. Here the applications exhibit direct network effects and the operating system/applications together exhibit indirect network effects.

Since many forms of software also exhibit supply side increasing returns to scale, the positive feedback can be particularly strong: more sales lead to both lower unit costs and more appeal to new customers. Once a firm has established market dominance with a particular product, it can be extremely hard to unseat it.

In the context of the Microsoft antitrust case, this effect is known as the ``applications barrier to entry.'' See Gilbert and Katz [2001], Klein [2001], and Whinston [2001] for an analysis of some of these concepts in that context.

Network effects are also related to two of the forces I described earlier: price discrimination and lock-in.

When network effects are present, early adopters may value the network good less than subsequent adopters. Thus, it makes sense for sellers to offer them a lower price, a practice known as ``penetration pricing'' in this context.

Network effects also contribute to lock-in. The more people that drive on the right-hand side of the road, the more valuable it is to me to follow suit. Conversely, a decision to drive on the left-hand side of the road is most effective if everyone does it at the same time. In this case, the switching costs are due to the cost of coordination among millions of individuals, a cost that may be extremely large.

If the value of a network depends on its size, then interconnection and/or standardization becomes an important strategic decision.

Generally, dominant firms with established networks or proprietary standards prefer not to interconnect. In the 1890s, the Bell System refused to allow access to its new long distance service to any competing carriers. In 1900-1912 Marconi International Marine Corporation licensed equipment, but wouldn't sell it, and refused to interconnect with other systems. In 1910-1920 Ford showed no interest in automobile industry parts standardization, since it was already a dominant, vertically-integrated firm. Today Microsoft is notorious for going its own way with respect to industry standards, and America Online has been reluctant to allow access to its instant messaging systems.

However, standards are not always anathema to dominant firms. In some cases, the benefits from standardization can be so compelling that a standard is worth adopting even from a purely private, profit-maximizing perspective.

Shapiro and Varian [1998a] describe why using a simple equation:

$$\text{your value} = \text{your share} \times \text{total industry value}$$
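One way to read this trade-off, assuming that a firm's value is its market share multiplied by total industry value, is that a standard is privately worthwhile whenever it enlarges the market by more than it dilutes the firm's share. The numbers and function names below are hypothetical and serve only to illustrate the arithmetic.

```python
def firm_value(share, industry_value):
    """Value captured by one firm: its share times total industry value (assumed form)."""
    return share * industry_value

def breakeven_growth(share_without, share_with):
    """Factor by which the industry must grow for the standard to leave the firm no worse off."""
    return share_without / share_with

# Hypothetical scenario: a proprietary system keeps a large share of a small market,
# while an open standard means a smaller share of a much larger market.
proprietary = firm_value(share=0.80, industry_value=100.0)
open_standard = firm_value(share=0.40, industry_value=300.0)

print(f"proprietary: {proprietary:.1f}, open standard: {open_standard:.1f}")
print(f"break-even market growth factor: {breakeven_growth(0.80, 0.40):.1f}")
```

In this illustration the firm's share halves, so the standard pays off only if the industry at least doubles in value; here it triples, and the firm comes out ahead.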

Besen and Farrell [1994] survey the economic literature on standards formation. They illustrate the strategic issues by focusing on a standards adoption problem with two firms championing incompatible standards, such as the Sony Betamax and VHS technologies for videotape. Each of these technologies exhibits network effects (indirect network effects, in this particular example).

Following Besen and Farrell [1994], we describe three forms of competition in standards setting: standards wars, standards negotiation, and competition between a standards leader and its followers.

With respect to standards wars, Besen and Farrell [1994] identify common tactics such as 1) penetration pricing to build an early lead, 2) building alliances with suppliers of complementary products, 3) expectations management such as bragging about market share or product pre-announcements, and 4) commitments to low prices in the future.

It is not hard to find examples of all of these strategies. Penetration pricing has already been described above. A nice recent example of building alliances is the DVD Forum, which successfully negotiated a standard format in the (primarily Japanese) consumer electronics industry, and worked with the film industry to ensure that sufficient content was available in the appropriate format at low prices.

Expectations management is very common; when there were two competing standards for 56 kbps modems, each producer advertised that it had an 80 percent market share. In standards wars, there is a very real sense in which the product that people expect to win will win. Nobody wants to be stranded with an incompatible product, so convincing potential adopters that you have the winning standard is critical.

Pre-announcements of forthcoming products are also an attractive ploy, but can be dangerous, since customers may hold off purchasing your current product in order to wait for the new product. This happened, for example, to the Osborne portable computer in the early eighties.

Finally, there is the low-price guarantee. When Microsoft introduced Internet Explorer it announced that it was free and would always be free. This was a signal to consumers that they would not be subject to lock-in if they adopted the Microsoft browser. Netscape countered by saying that its products would always be open. Each competitor played to its strength, but it seems that Microsoft had the stronger hand.

The standards negotiation problem is akin to the classic Battle of the Sexes game: each player prefers a standard to no standard, but each prefers its own standard to the other's.
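The structure of this game can be made concrete with a small sketch that enumerates its pure-strategy Nash equilibria. The payoff numbers are hypothetical; they only encode the ordering described above, in which each firm prefers any common standard to none but prefers its own standard most.

```python
from itertools import product

STRATEGIES = ("A", "B")  # A = firm 1's preferred standard, B = firm 2's preferred standard

# PAYOFFS[(s1, s2)] = (payoff to firm 1, payoff to firm 2); hypothetical numbers
PAYOFFS = {
    ("A", "A"): (3, 2),  # both adopt firm 1's standard
    ("B", "B"): (2, 3),  # both adopt firm 2's standard
    ("A", "B"): (0, 0),  # no common standard
    ("B", "A"): (0, 0),  # no common standard
}

def is_nash(s1, s2):
    """True if neither firm can gain by unilaterally switching its strategy."""
    u1, u2 = PAYOFFS[(s1, s2)]
    firm1_ok = all(u1 >= PAYOFFS[(d, s2)][0] for d in STRATEGIES)
    firm2_ok = all(u2 >= PAYOFFS[(s1, d)][1] for d in STRATEGIES)
    return firm1_ok and firm2_ok

pure_nash = [(s1, s2) for s1, s2 in product(STRATEGIES, repeat=2) if is_nash(s1, s2)]
print(pure_nash)  # [('A', 'A'), ('B', 'B')]
```

Both coordinated outcomes are equilibria, which is why the negotiation is contentious: the firms agree that a standard is desirable but disagree about which one.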

As in any bargaining problem, the outcome of the negotiations will depend, to some extent, on the threat power of the participants, that is, on what will happen to them if negotiations break down. Thus it is common to see companies continuing to develop proprietary solutions, even while engaged in standards negotiation.

Sometimes standards are negotiated under the oversight of official standards bodies, such as the International Telecommunication Union (ITU), the American National Standards Institute (ANSI), the Internet Engineering Task Force (IETF), or any of dozens of other standards-setting bodies. These bodies have the advantage of experience and authority; however, they tend to be rather slow moving. In recent years, many ad hoc standards bodies have been formed to create a single standard. The standards chosen by these ad hoc groups may not be as good as those of the traditional bodies, but they are often developed much more quickly. See Libicki et al. [2000] for a description of standards setting involving the Internet and Web.

Of course, there is often considerable mistrust in standards negotiation, and for good reason. Typically, participating firms are required to disclose any technologies for which they own intellectual property that may be relevant to the negotiations. Such technologies may eventually be incorporated into the final standard, but only after reaching agreements that they will be licensed on ``fair, reasonable, and non-discriminatory terms.'' But it is not uncommon to see companies fail to disclose all relevant information in such negotiations, leading to accusations of breach of faith or to lawsuits.

Another commonly used tactic is for firms to cede control of a standard to an independent third party, such as one of the bodies mentioned above. Microsoft has recently developed a computer language called C# that it hopes will be a competitor to Java. It has submitted the language to ECMA, a computer industry standards body based in Switzerland. Microsoft correctly realized that in order to convince anyone to code in C# it would have to relinquish control over the language.

However, the extent to which Microsoft has actually released control is still unclear. Babcock [2001] reports that there may be blocking patents on aspects of C#, and ECMA does not require prior disclosure of such patents, as long as Microsoft is willing to license them on non-discriminatory terms.

In the third form of competition, a typical example is where a large, established firm wants to maintain a proprietary standard, but a small upstart, or a group of small firms, wants to interconnect with that standard. In some cases, the proprietary standard may be protected by intellectual property laws. In other cases, the leader may choose to change its technology frequently to keep the followers behind. Frequent upgrades have the advantage that the leader also makes its own installed base obsolete, helping to address the durable goods monopoly problem mentioned earlier.

Another tactic for the follower is to use an adapter (Farrell and Saloner [1992]). AM and FM radio never did reach a common standard, but they peacefully co-exist in a common system. Similarly, ``incompatible'' software systems can be made to interoperate by building appropriate converters and adapters. Sometimes this is done with the cooperation of the leader, sometimes without.

For example, the open source community has been very clever in building adapters to Microsoft's standards through reverse engineering. Samba is a system that runs on Unix machines and allows them to interoperate with Microsoft networks. Similarly, there are many open source converters for Microsoft applications software such as Word and Excel.

The economic literature on standardization has tended to focus on strategic issues, but there are also considerable cost savings due to economies of scale in manufacture and risk reduction. Thompson [1954] describes the early history of the U.S. automobile industry, emphasizing these factors.

He shows that the smaller firms were interested in standardization in order to reap sufficient economies of scale to compete with Ford and G.M., who initially showed no interest in standardization efforts. Small suppliers were also interested in standardization, since that allowed them to diversify the risk associated with supplying idiosyncratic parts to a single assembler.

The Society of Automotive Engineers (SAE) carried out the standardization process, which yielded many cost advantages to the automotive industry. By the late 1920s, Ford and G.M. began to see the advantages of standardization, and joined the effort, at first focusing on the products of complementors (tires, petroleum products, and the like) but eventually playing a significant role in automobile parts standardization.

It is common in high-technology industries to see products that are useless unless they are combined into a system with other products: hardware is useless without software, DVD players are useless without content, and operating systems are useless without applications. These are all examples of complements, that is, goods whose value depends on their being used together.

Many of the examples we have discussed involve complementarities. Lock-in often occurs because users must invest in complementary products, such as training, to effectively use a good. Direct network effects are simply a symmetric form of complementarities: a fax machine is most useful if there are other fax machines. Indirect network effects or chicken-and-egg problems are also a form of systems effects. Standards involve a form of complementarity in that they are often designed to allow for seamless interconnection of components (one manufacturer's DVDs will play on another manufacturer's machine).

Systems of complements raise many important economic issues. Who will do the system integration: the manufacturer, the end user, or some intermediary, such as an OEM? How will the value be divided up among the suppliers of complements? How will bottlenecks be overcome, and how will the system evolve?